DolphinAttack: inaudible voice commands allow attackers to control Siri, Alexa and other digital assistants

Research on ultrasonic voice-command hacking

Chinese researchers have discovered a vulnerability in voice assistants from Apple, Google, Amazon, Microsoft, Samsung, and Huawei.

Using a technique called “DolphinAttack”, a team from Zhejiang University translated typical vocal commands into ultrasonic frequencies that are too high for the human ear to hear, but perfectly decipherable by the microphones and software powering our always-on voice assistants.

How does it work?

Frequencies greater than 20 kHz are completely inaudible to the human ear.

Most audio-capable devices, such as smartphones, are also designed to automatically filter out audio signals above 20 kHz.

In fact, all of the components in a voice capture system are designed to filter out signals outside the audible range, which is typically 20 Hz to 20 kHz, the researchers said.

As a result, it was generally considered almost impossible until now to get a speech-recognition system to make sense of sounds that are inaudible to humans, they noted.
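To make the filtering assumption concrete, here is a minimal sketch of a digital low-pass filter with a 20 kHz cutoff. It is only an illustration: the sample rate, the tap count, and the function names `lowpass_taps` and `gain_at` are assumptions, and real devices filter with analog and digital stages that are not described here. The script prints the filter's gain for an audible 1 kHz tone and for an ultrasonic 30 kHz tone.

```python
import cmath
import math


def lowpass_taps(cutoff_hz, sample_rate_hz, num_taps=101):
    """Design a Hamming-windowed sinc low-pass FIR filter with unity gain at DC."""
    fc = cutoff_hz / sample_rate_hz  # normalized cutoff in cycles per sample
    mid = (num_taps - 1) / 2
    taps = []
    for n in range(num_taps):
        k = n - mid
        # Ideal low-pass impulse response (sinc); the k == 0 limit is 2 * fc.
        ideal = 2 * fc if k == 0 else math.sin(2 * math.pi * fc * k) / (math.pi * k)
        window = 0.54 - 0.46 * math.cos(2 * math.pi * n / (num_taps - 1))
        taps.append(ideal * window)
    total = sum(taps)
    return [t / total for t in taps]  # normalize so a constant input passes unchanged


def gain_at(taps, freq_hz, sample_rate_hz):
    """Magnitude of the filter's frequency response at freq_hz."""
    w = 2 * math.pi * freq_hz / sample_rate_hz
    return abs(sum(t * cmath.exp(-1j * w * n) for n, t in enumerate(taps)))


SAMPLE_RATE_HZ = 192_000  # assumed; high enough to represent ultrasonic tones
taps = lowpass_taps(20_000, SAMPLE_RATE_HZ)
print(f"gain at 1 kHz:  {gain_at(taps, 1_000, SAMPLE_RATE_HZ):.4f}")
print(f"gain at 30 kHz: {gain_at(taps, 30_000, SAMPLE_RATE_HZ):.4f}")
```

The audible tone passes almost unchanged while the ultrasonic tone is strongly attenuated, which is why sounds above 20 kHz were expected never to reach the speech recognizer.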

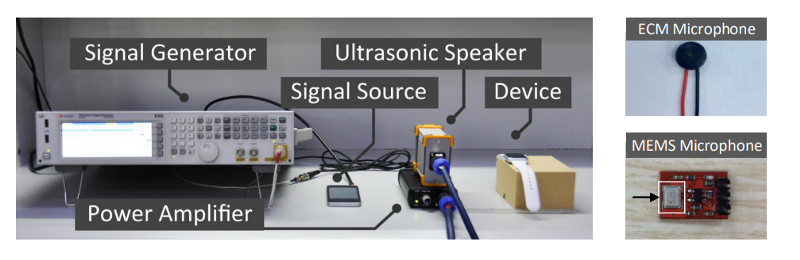

Using an external battery, an amplifier, and an ultrasonic transducer, the team was able to send sounds that the voice assistants’ microphones could pick up and understand from up to 170 cm away, a distance that an attacker could likely achieve without raising too much suspicion.

Here is a video of the PoC:

[embed]https://www.youtube.com/watch?v=21HjF4A3WE4[/embed]

Defenses

The researchers propose both software and hardware defense strategies.

Hardware-Based Defense

- Microphone Enhancement: microphones should be enhanced and designed to suppress any acoustic signals whose frequencies are in the ultrasound range.

- Inaudible Voice Command Cancellation: add a module before the low-pass filter (LPF) to detect the modulated voice commands and cancel the baseband with the modulated voice commands.
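The detection half of the second idea can be sketched in software, assuming the signal is sampled fast enough to still contain ultrasonic content before any low-pass filtering. The sketch below uses the Goertzel algorithm to probe a few ultrasonic frequencies and flags the input if any of them carries significant energy. The probe grid, the threshold, and the function names are hypothetical choices for illustration, not the researchers' design, and the cancellation step is not shown.

```python
import math


def goertzel_amplitude(samples, freq_hz, sample_rate_hz):
    """Estimate the amplitude of one frequency component (Goertzel algorithm)."""
    coeff = 2 * math.cos(2 * math.pi * freq_hz / sample_rate_hz)
    s_prev, s_prev2 = 0.0, 0.0
    for x in samples:
        s_prev, s_prev2 = x + coeff * s_prev - s_prev2, s_prev
    power = s_prev**2 + s_prev2**2 - coeff * s_prev * s_prev2
    return 2 * math.sqrt(max(power, 0.0)) / len(samples)


def has_ultrasonic_carrier(samples, sample_rate_hz, threshold=0.05):
    """Return True if any probed ultrasonic frequency exceeds the threshold."""
    probes_hz = range(21_000, 41_000, 1_000)  # hypothetical probe grid, 21 to 40 kHz
    return any(
        goertzel_amplitude(samples, f, sample_rate_hz) > threshold for f in probes_hz
    )


def tone(freq_hz, amplitude, sample_rate_hz, count):
    """Generate a pure sine tone as a list of samples."""
    return [
        amplitude * math.sin(2 * math.pi * freq_hz * n / sample_rate_hz)
        for n in range(count)
    ]


SAMPLE_RATE_HZ = 96_000  # assumed; must exceed twice the highest probed frequency
audible = tone(1_000, 0.5, SAMPLE_RATE_HZ, 960)
ultrasonic = tone(25_000, 0.5, SAMPLE_RATE_HZ, 960)
print(has_ultrasonic_carrier(audible, SAMPLE_RATE_HZ))
print(has_ultrasonic_carrier(ultrasonic, SAMPLE_RATE_HZ))
```

Goertzel is used here because it evaluates a handful of frequencies cheaply without a full FFT; a real module would also have to cope with noise and with carriers that fall between probe frequencies.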

Software-Based Defense

Software-based defense looks at the unique features of modulated voice commands that distinguish them from genuine ones. In particular, a machine-learning-based classifier could detect them.
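As a rough illustration of what such a classifier could look like, the sketch below trains a nearest-centroid model on labeled feature vectors and uses it to label new recordings. Everything specific here is an assumption: the two-element feature vectors are synthetic placeholders rather than measurements, the feature extraction itself is not shown, and nearest-centroid is chosen only for brevity, not because the researchers used it.

```python
import math


def centroid(vectors):
    """Component-wise mean of a list of equal-length feature vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def train(genuine, modulated):
    """Build a model holding one centroid per class."""
    return {"genuine": centroid(genuine), "modulated": centroid(modulated)}


def classify(model, features):
    """Return the label whose centroid is closest to the feature vector."""
    return min(model, key=lambda label: math.dist(model[label], features))


# Synthetic placeholder features (hypothetical, e.g. two band-energy ratios).
genuine_examples = [[0.10, 0.80], [0.15, 0.75], [0.12, 0.82]]
modulated_examples = [[0.40, 0.50], [0.45, 0.55], [0.38, 0.48]]

model = train(genuine_examples, modulated_examples)
print(classify(model, [0.42, 0.52]))
print(classify(model, [0.11, 0.79]))
```

In practice the hard part is choosing features that actually separate demodulated commands from genuine speech, and validating the classifier on real recordings.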