Some useful tools for finding insecure Google Cloud Storage buckets

And some suggestions for hardening your buckets!

Google Cloud Storage is a service similar to Amazon S3 and, like the better-known Amazon service, it suffers from the same security problems caused by incorrect configuration.

Google buckets, too, may expose sensitive data when their owners fail to configure access rules correctly, leaving them publicly accessible.

Today I'd like to share a couple of tools useful for vulnerability assessment of Google Cloud Storage buckets.

GCPBucketBrute

A script that enumerates Google Storage buckets starting from a keyword (used to generate a list of permutations for the search), determines what access you have to them, and determines whether that access can be used for privilege escalation.

How it works

- Given a keyword, this script enumerates Google Storage buckets based on a number of permutations generated from the keyword.

- Then, any discovered bucket will be output.

- Then, any permissions that you are granted (if any) to any discovered bucket will be output.

- Then the script will check those privileges for privilege escalation (storage.buckets.setIamPolicy) and will output anything interesting (such as publicly listable, publicly writable, authenticated listable, privilege escalation, etc).
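The steps above can be sketched in a few lines of Python. This is only an illustration of the idea, not GCPBucketBrute's actual code: the affix list, the function names and the finding labels are made up for the example, and treating HTTP 400/404 as "no such bucket" is an assumption. The only network call goes to the Cloud Storage JSON API `testPermissions` endpoint, queried without credentials.

```python
import json
import urllib.error
import urllib.parse
import urllib.request

# Hypothetical affixes for illustration; a real wordlist is much larger.
AFFIXES = ["dev", "prod", "backup"]
SEPARATORS = ["", "-", "_", "."]

# Permissions we ask the API about for each candidate bucket.
PERMISSIONS = [
    "storage.objects.list",
    "storage.objects.get",
    "storage.objects.create",
    "storage.buckets.setIamPolicy",
]


def permutations(keyword):
    """Build candidate bucket names by combining the keyword with each affix."""
    names = {keyword}
    for affix in AFFIXES:
        for sep in SEPARATORS:
            names.add(f"{keyword}{sep}{affix}")
            names.add(f"{affix}{sep}{keyword}")
    return sorted(names)


def probe_bucket(bucket):
    """Return the permissions an anonymous caller holds on the bucket,
    or None if the bucket does not seem to exist (assumed: HTTP 400/404)."""
    query = urllib.parse.urlencode([("permissions", p) for p in PERMISSIONS])
    url = (
        "https://www.googleapis.com/storage/v1/b/"
        f"{urllib.parse.quote(bucket, safe='')}/iam/testPermissions?{query}"
    )
    try:
        with urllib.request.urlopen(url, timeout=10) as resp:
            return json.load(resp).get("permissions", [])
    except urllib.error.HTTPError as err:
        if err.code in (400, 404):
            return None
        raise


def classify(granted):
    """Turn a list of granted permissions into human-readable findings."""
    findings = []
    if "storage.objects.list" in granted:
        findings.append("publicly listable")
    if "storage.objects.create" in granted:
        findings.append("publicly writable")
    if "storage.buckets.setIamPolicy" in granted:
        findings.append("privilege escalation")
    return findings
```

A scan would loop over `permutations("keyword")`, call `probe_bucket` on each name and print `classify(...)` for every bucket that does not return `None`. Only run it against buckets you are authorized to test.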

Installation

git clone https://github.com/RhinoSecurityLabs/GCPBucketBrute.git

cd GCPBucketBrute/

pip3 install -r requirements.txt

or

python3 -m pip install -r requirements.txt

Datadrifter

Spyglass Security Datadrifter is an online tool that finds Google Cloud buckets left open.

By logging in with a Google account, you can run simple searches (with the free account) across the set of badly configured buckets scanned by the crawler.

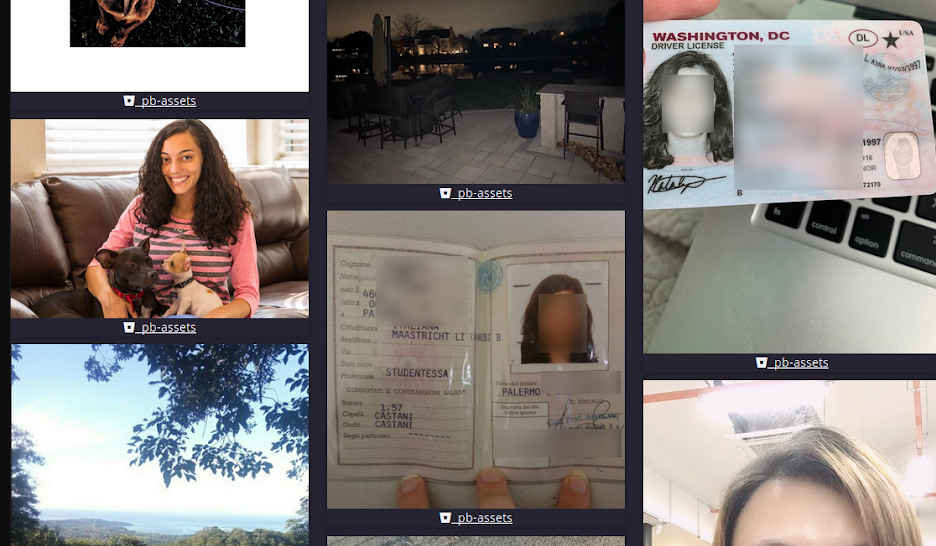

The paid version allows you to see much more, for example images of personal documents and screenshots of purchase transactions, with names, surnames, dates and amounts.

How can I avoid this kind of leak?

Google has published some useful tips in its knowledge base:

Check for appropriate permissions

The first step to securing a Cloud Storage bucket is to make sure that only the right individuals or groups have access. By default, access to Cloud Storage buckets is restricted, but owners and admins often make the buckets or objects public. While there are legitimate reasons to do this, making buckets public can open avenues for unintended exposure of data, and should be approached with caution.
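As a rough illustration of such a permission check, the sketch below looks for bindings that grant a role to `allUsers` or `allAuthenticatedUsers`, the two special IAM members that make a bucket public. It assumes the bindings are already available as a list of dictionaries with `role` and `members` keys, which is the shape of the JSON printed by `gsutil iam get gs://BUCKET`. The function name and the example policy are hypothetical.

```python
# The two special members that expose a bucket to everyone.
PUBLIC_MEMBERS = {"allUsers", "allAuthenticatedUsers"}


def public_bindings(bindings):
    """Return (role, member) pairs that grant access to the public."""
    found = []
    for binding in bindings:
        for member in binding.get("members", []):
            if member in PUBLIC_MEMBERS:
                found.append((binding["role"], member))
    return found


# Hypothetical policy: one private binding, one public one.
EXAMPLE_BINDINGS = [
    {"role": "roles/storage.admin", "members": ["user:alice@example.com"]},
    {"role": "roles/storage.objectViewer", "members": ["allUsers"]},
]

print(public_bindings(EXAMPLE_BINDINGS))
# prints [('roles/storage.objectViewer', 'allUsers')]
```

An empty result means no IAM binding makes the bucket public. Legacy object ACLs are a separate mechanism and are not covered by this check.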

Check for sensitive data

Even if you’ve set the appropriate permissions, it’s important to know if there’s sensitive data stored in a Cloud Storage bucket. Enter Cloud Data Loss Prevention (DLP) API.
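To give an idea of what a DLP scan of a bucket involves, here is a minimal sketch that builds an inspection job configuration and submits it with the `google-cloud-dlp` Python client. Treat it as a starting point under assumptions: the helper names, the choice of info types and the `gs://BUCKET/*` wildcard are illustrative, and the job defines no actions, so you would still need to decide where findings are saved.

```python
def build_inspect_job(bucket_name, info_types):
    """Build an inspect job configuration (as a plain dict) for one bucket."""
    return {
        "storage_config": {
            "cloud_storage_options": {
                # Wildcard URL selecting the objects to scan (illustrative).
                "file_set": {"url": f"gs://{bucket_name}/*"}
            }
        },
        "inspect_config": {
            # Built-in DLP detectors, e.g. EMAIL_ADDRESS or CREDIT_CARD_NUMBER.
            "info_types": [{"name": name} for name in info_types],
        },
    }


def submit_inspect_job(project_id, inspect_job):
    """Submit the job. Requires the google-cloud-dlp package and
    application default credentials; imported lazily for that reason."""
    from google.cloud import dlp_v2

    client = dlp_v2.DlpServiceClient()
    return client.create_dlp_job(
        request={
            "parent": f"projects/{project_id}/locations/global",
            "inspect_job": inspect_job,
        }
    )
```

For example, `build_inspect_job("my-bucket", ["EMAIL_ADDRESS", "CREDIT_CARD_NUMBER"])` produces the configuration, and `submit_inspect_job("my-project", job)` would start the scan in a project where the DLP API is enabled (both names are placeholders).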

Take Action

If you find sensitive data in buckets that are shared too broadly, you should take appropriate steps to resolve this quickly. You can:

- Make the public buckets or objects private again

- Restrict access to the bucket (see Using IAM)

- Remove the sensitive file or object from the bucket

- Use the Cloud DLP API to redact sensitive content
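The first action in the list, making a public bucket private again, can be sketched as follows. The pure function drops `allUsers` and `allAuthenticatedUsers` from a list of IAM bindings; the second function applies it through the `google-cloud-storage` client. The function names are hypothetical, and the sketch assumes access is managed through IAM only (legacy object ACLs would need separate handling).

```python
# The two special members that expose a bucket to everyone.
PUBLIC_MEMBERS = {"allUsers", "allAuthenticatedUsers"}


def strip_public_members(bindings):
    """Return a copy of the bindings without public members.
    Bindings left with no members are dropped entirely."""
    cleaned = []
    for binding in bindings:
        members = sorted(
            m for m in binding.get("members", []) if m not in PUBLIC_MEMBERS
        )
        if members:
            cleaned.append({**binding, "members": members})
    return cleaned


def make_bucket_private(bucket_name):
    """Apply the cleanup to a real bucket. Requires the google-cloud-storage
    package and credentials allowed to set the bucket's IAM policy."""
    from google.cloud import storage

    bucket = storage.Client().bucket(bucket_name)
    policy = bucket.get_iam_policy(requested_policy_version=3)
    policy.bindings = strip_public_members(policy.bindings)
    bucket.set_iam_policy(policy)
```

Keeping the filtering logic separate from the API call makes it easy to review what would change before touching a production bucket.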

Stay vigilant!

Protecting sensitive data is not a one-time exercise. Permissions change, new data is added and new buckets can crop up without the right permissions in place. As a best practice, set up a regular schedule to check for inappropriate permissions, scan for sensitive data and take the appropriate follow-up actions.